Sometimes, the best-laid plans have unintended consequences.

Take, for example, James Watt in 1763. He was working in the basement of a building at the University of Glasgow, repairing a model of a Newcomen steam engine, one of the first engines built to pump water out of deep coal mines.

Watt discovered that this early steam engine was inefficient. It wasted energy because its cylinder had to be cooled and then reheated with every stroke.

He developed a separate condensing chamber, connected to the engine, where spent steam was condensed outside the main cylinder. That kept the cylinder hot and allowed the engine to run far more efficiently.

The result helped spark the Industrial Revolution, as the steam engine became an integral part of manufacturing and transportation in the 19th century.

Watt’s invention meant it took less coal to operate these engines. That made it cheaper for industries to adopt the technology and produce goods in larger quantities.

While Watt’s invention eventually led to semi-automated factories around the world, it also created an unintended consequence that we can see today.

Coal and the Jevons Paradox

The main benefit of Watt’s addition to the steam engine was efficiency.

It took less coal to equal or even improve overall output.

In theory, this should have reduced consumption of one of the world’s leading energy sources of the time … but it didn’t.

Instead, the steam engine sparked a coal boom. Coal consumption jumped a massive 6,800% from 1800 to 1900.

In 1865, British economist William Stanley Jevons warned in his book The Coal Question that more efficient steam engines were accelerating, rather than slowing, the depletion of coal resources in the United Kingdom.

This became known as the Jevons Paradox: making a resource more efficient to use can end up increasing total demand for it, rather than reducing it.

And this paradox can explain a problem we’re facing today.

AI’s Future Power Drain

Accelerated computing and artificial intelligence (AI) are being used to increase the efficiency of data centers around the world.

AI-powered analysis can forecast demand patterns, energy consumption and equipment failures to help operators allocate resources more effectively.

AI also helps data centers optimize storage solutions based on usage patterns and can automate data maintenance, detecting changes in data records and automatically updating them.

As data centers become more efficient, new ones are popping up around the world.

The problem, as with Watt’s improved steam engine, is that greater efficiency is driving greater total consumption, and AI consumes power at an incredibly fast rate.

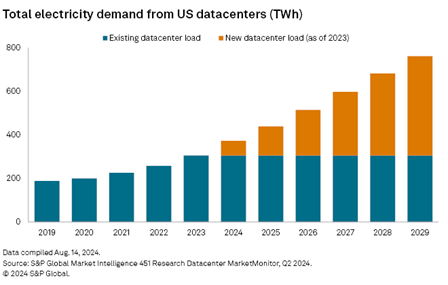

In 2023, data centers in the U.S. used around 300 terawatt-hours of electricity. With new data centers coming online, coupled with the existing load, that usage is estimated to jump to around 800 terawatt-hours by 2029.

That’s a 167% increase in electricity demand for data centers in the U.S. alone in six years.

And 800 terawatt-hours of electricity is enough to cool 400 million homes for an entire year!

Aging fossil-fuel plants are already operating longer than anticipated … or being reactivated … to meet this data center power demand. We need new solutions to satisfy this thirst for power.

Fueling the AI Power Surge

The amount of power needed to scale up AI and data centers is enormous.

Just as the steam era required a massive expansion of coal production, we’ll have to invest in new technologies that can generate the power AI depends on.

The bright spot here is that one of those new technologies is already in the works … and no one outside of Big Tech (such as Nvidia, Microsoft and Google) is paying any attention to it.

After extensive research into a solution for this modern-day Jevons Paradox, Adam O’Dell has uncovered a technology that has already been financed by some of Big Tech’s most prominent players.

But we’re taking down Adam’s urgent message after the U.S. stock market closes today…

When Nvidia’s quarterly earnings come out after the closing bell, the entire AI landscape will change.

Find out about this incredible technology now … because without it, AI will come to a standstill.

Safe trading,

Matt Clark, CMSA®

Research Analyst, Money & Markets